Better table-driven tests (if using Ginkgo)

TL;DR

ginkgo’s table-driven DSL is best avoided. This post shows a better way to do table-driven testing with ginkgo.

Problems with ginkgo’s table-driven testing DSL

In CloudNativePG we have a collection of Kubernetes reconcilers that manage different aspects of PostgreSQL for our custom resource, Cluster, which represents a database cluster.

These reconcilers have a standard signature. For example, for our tablespace reconciler:

result, err := tablespaceReconciler.Reconcile(ctx, reconcile.Request{})

The outputs of a Reconcile() call are simple: an error field, and a

Result

that may call for re-queueing to retry later.

The main action of a reconciler like the above is in its side effects on a

resource. In our case, on a Cluster.

These side-effects have a standard form: the affected resource should have an

updated

Status sub-resource.

In our example above, the Cluster’s Status sub-resource should be updated with

information about the state of the tablespaces we’re managing.

The structure of a Reconcile call, then, is pretty uniform. This makes it a

good fit for table-driven testing. The library we use in CloudNativePG for

testing, ginkgo, has a dedicated DSL for

table-driven testing.

Let’s create a table-driven test with ginkgo. What we want to test is that

given a configuration for tablespaces for a Cluster, the Reconcile will result

in queries back and forth with Postgres, and based on the success or

failure of those queries, a re-queue may or may not be required. The Cluster

Status should be patched with details about the tablespaces.

The ginkgo DSL for table-driven tests consists of a top-level node,

DescribeTable, which takes as its second argument a function that will run the

tests. The following arguments to DescribeTable are a variadic list of

Entry nodes. These nodes contain a series of arguments to be passed to the

testing function.

The testing function should create a tablespaceReconciler built with a mocked

database connection and a fake Kubernetes client, so that we can simulate many

scenarios. It should create a CloudNativePG Cluster, and make it retrievable

using the fake Kubernetes client. Then it should test the Reconcile call by

adding tablespace definitions to the cluster, making sure the expected

interactions with the database occur, and verifying the cluster Status is

updated with the expected state of the tablespaces.

It’s all straightforward, if tedious:

var _ = DescribeTable("tablespace Reconcile",

func(

tablespaceSpec []apiv1.TablespaceConfiguration,

postgresExpectations func(sqlmock.Sqlmock),

shouldRequeue bool,

expectedTablespaceStatus []apiv1.TablespaceState,

) {

ctx := context.TODO()

// Let's create a database Mock with sqlmock

db, dbMock, err := sqlmock.New( [... snipped ...]

// Let's define a Cluster

cluster := &apiv1.Cluster{

ObjectMeta: metav1.ObjectMeta{

Name: "cluster-example",

Namespace: "default",

},

}

// Let's add the tablespaces passed as an argument

cluster.Spec.Tablespaces = tablespaceSpec

// Let's build the fake Kubernetes client and make the Cluster

// available

fakeClient := fake.NewClientBuilder().

WithObjects(cluster). [... snipped ...]

// Let's do some more setup

[...]

// Let's build the tablespace reconciler

tablespaceReconciler := TablespaceReconciler{

instance: &instance,

client: fakeClient,

}

// Let's run `Reconcile`

result, err := tablespaceReconciler.Reconcile(ctx, reconcile.Request{})

Expect(err).ShouldNot(HaveOccurred())

Expect(result).To(BeZero())

// Let's retrieve the updated cluster object

var updatedCluster apiv1.Cluster

err := fakeClient.Get(ctx, client.ObjectKey{

Namespace: cluster.Namespace,

Name: cluster.Name,

}, &updatedCluster)

// Let's check the cluster status

Expect(err).ToNot(HaveOccurred())

Expect(updatedCluster.Status.TablespacesStatus).

To(Equal(expectedTablespaceStatus))

},

[... the Entry objects will go here ...]

Once we have the test scaffold defined, we hit it with a variety of inputs

and expected results to see how Reconcile behaves:

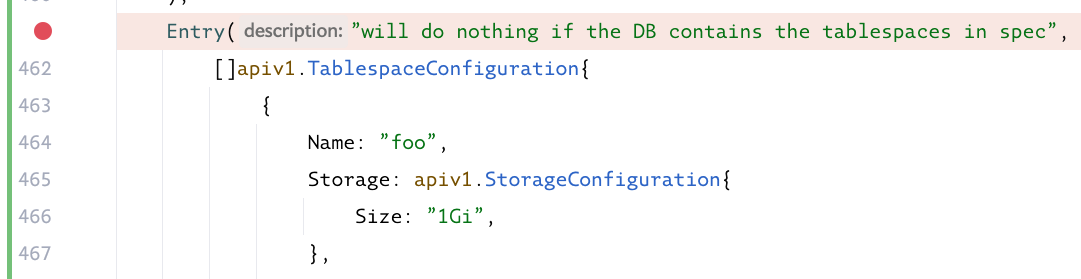

Entry("will do nothing if the DB already contains the tablespaces",

[]apiv1.TablespaceConfiguration{

{

Name: "foo",

Storage: apiv1.StorageConfiguration{

Size: "1Gi",

},

Owner: apiv1.DatabaseRoleRef{

Name: "app",

},

},

},

func(mock sqlmock.Sqlmock) {

// we expect the reconciler to query the DB

// for existing tablespaces

rows := sqlmock.NewRows(

[]string{"spcname", "rolname"}).

AddRow("foo", "app")

mock.ExpectQuery(expectedListStmt).

WillReturnRows(rows)

},

false, // should not re-queue

[]apiv1.TablespaceState{

{

Name: "foo",

Owner: "app",

State: "reconciled",

},

},

),

This is pretty enough (for a test), but there’s something that

bothers me. Those Entry nodes are too generic:

func Entry(description interface{}, args ...interface{}) TableEntry

Go is a statically typed language with an escape route in the form of the empty interface. The empty interface is useful sometimes, but overusing it leads to underpowered static type checking.

What would happen if we forgot an argument in the Entry in our code above?

[PANICKED] Test Panicked

In [It] at: [snipped...]/onsi/ginkgo/v2@v2.20.2/table_dsl.go:[snipped...]

Too few parameters passed in to Table Body function

Forgetting a parameter altogether should not happen that much, I hear you

protest. OK, but how about getting the order of the arguments wrong? That

happens to me all the time. If that happened with the Entry above, we’d get

something like:

[PANICKED] Test Panicked

In [It] at: [snipped...]/onsi/ginkgo/v2@v2.20.2/table_dsl.go:[snipped...]

Incorrect parameters type passed to Table Body function

Entry("will do nothing if the DB already contains the tablespaces",

/Users/[snipped...].go:461

The Table Body function expected parameter #2 to be of type

<func(sqlmock.Sqlmock)> but you passed in <bool>

Gosh, is this a scripting language or what? A panic is not something we

should see in the regular operation of a library. Ugh.

There’s another thing I find annoying about ginkgo’s table DSL: it makes

working with a debugger even more cumbersome. If a particular

Entry is failing, you might think of running a debugger and adding a

breakpoint:

If you step down into the code, you’ll be taken into ginkgo’s DSL implementation

internals, rather than to your test code. You can get around this by setting a

breakpoint at a convenient place in your test function in DescribeTable,

except, this breakpoint will now be hit by other Entry items that you were not

interested in debugging.

Not to worry, ginkgo has the concept of “focus”: you you can rename the Entry

you want to debug into FEntry, and now ginkgo will test only that entry, and

your breakpoint in the test function will behave the way you expected. Just

remember to rename the FEntry back to an Entry once you’re done. You

wouldn’t want to inadvertently stop running your full test suite, right?

I admit there’s ingenuity behind the table-driven testing support in

ginkgo, but in my opinion it’s best avoided.

How should we write the tests then?

A better approach to table-driven tests

The “regular” ginkgo DSL consists mainly of It nodes that specify a test to

be carried out, and Before and After nodes that help do common setup and

tear-down, reducing the boilerplate needed in the It nodes.

If we want to convert our Entry nodes into It nodes, we can do the setup

of sqlmock, of the fake Kubernetes client, and of the Cluster, in

BeforeEach. We can also have some common teardown in AfterEach.

BeforeEach(func() {

// Let's create a database Mock with sqlmock

db, dbMock, err := sqlmock.New( [... snipped ...]

// Let's define a Cluster

cluster := &apiv1.Cluster{

ObjectMeta: metav1.ObjectMeta{

Name: "cluster-example",

Namespace: "default",

},

}

// Let's build the fake Kubernetes client and make the Cluster

// available

fakeClient := fake.NewClientBuilder().

WithObjects(cluster). [... snipped ...]

[...]

})

AfterEach(func() {

Expect(dbMock.ExpectationsWereMet()).To(Succeed())

})

It("will do nothing if the DB already contains the tablespaces",

func(ctx SpecContext) {

initialCluster := cluster.DeepCopy()

cluster.Spec.Tablespaces = []apiv1.TablespaceConfiguration{

{

Name: "foo",

Storage: apiv1.StorageConfiguration{

Size: "1Gi",

},

},

}

err := fakeClient.Patch(ctx, cluster, client.MergeFrom(initialCluster))

Expect(err).NotTo(HaveOccurred())

[...]

})

But now our It node has to Patch the cluster that was created in the

BeforeEach node. We could rewrite the It node to create the Cluster and the fakeClient

completely. Then the Patch would be unnecessary, but that’s a lot of

boilerplate for all our It nodes. There’s no way to dynamically inject

parameters into the code in BeforeEach.

In addition, creating objects in Before nodes forces us to declare

quasi-global variables so that the It nodes can use those objects. I’ve

omitted this from the sample above, which is already clunky enough.

Luckily, Go is a general purpose programming language, so we can tailor how we use ginkgo’s facilities. What was nice about the table-driven code was that the testing function we defined was self-contained, linear, easily readable (even if tedious). There’s nothing stopping us from using that function. Let’s name it so we can reuse it widely:

func assertReconcileTablespaces(

tablespaceSpec []apiv1.TablespaceConfiguration,

postgresExpectations func(sqlmock.Sqlmock),

shouldRequeue bool,

expectedTablespaceStatus []apiv1.TablespaceState,

) {

ctx := context.TODO()

// Let's create a database Mock with sqlmock

db, dbMock, err := sqlmock.New( [... snipped ...]

// Let's define a Cluster

cluster := &apiv1.Cluster{

ObjectMeta: metav1.ObjectMeta{

Name: "cluster-example",

Namespace: "default",

},

}

[...]

Well, we should make it get the context as a parameter rather than

creating it inside, but with just that change, the test function from the

table-driven DSL will do fine.

With assertReconcileTablespaces, we no longer need the Before and After

nodes for setup and teardown.

Our It node could now look like this:

It("will do nothing if the DB already contains the tablespaces",

func(ctx SpecContext) {

assertReconcileTablespaces(ctx,

[]apiv1.TablespaceConfiguration{

{

Name: "foo",

Storage: apiv1.StorageConfiguration{

Size: "1Gi",

},

Owner: apiv1.DatabaseRoleRef{

Name: "app",

},

},

},

func(mock sqlmock.Sqlmock) {

// we expect the reconciler to query the DB

// for existing tablespaces

rows := sqlmock.NewRows(

[]string{"spcname", "rolname"}).

AddRow("foo", "app")

mock.ExpectQuery(expectedListStmt).WillReturnRows(rows)

},

false,

[]apiv1.TablespaceState{

{

Name: "foo",

Owner: "app",

State: "reconciled",

},

},

)

})

Personally I find it difficult to parse function applications when the

arguments are so sprawled. Some other languages have named args

or keyword parameters that can help. In Go, I often end up creating a

struct to pass as an argument to this kind of spread-out function.

I find that the struct makes the code more readable, better scannable from top to bottom (I think Tom Duff might approve). The struct’s field names help to figure out where you are amid the sprawl. Let’s rewrite the test scaffold this way:

// tablespaceTest represents the inputs and expectations for

// a test of the tablespace reconciler

type tablespaceTest struct {

tablespacesInSpec []apiv1.TablespaceConfiguration

postgresExpectations func(sqlmock.Sqlmock)

shouldRequeue bool

expectedTablespaceStatus []apiv1.TablespaceState

}

// assertReconcileTablespaces is the full test, going from setting up

// the mocks and the cluster to running Reconcile() to verifying all

// expectations are met

func assertReconcileTablespaces(

ctx context.Context,

tt tablespaceTest,

) {

And now let’s rewrite the It spec with our new code:

It("will do nothing if the DB already contains the tablespaces",

func(ctx SpecContext) {

assertReconcileTablespaces(ctx, tablespaceTest{

tablespacesInSpec: []apiv1.TablespaceConfiguration{

{

Name: "foo",

Storage: apiv1.StorageConfiguration{

Size: "1Gi",

},

Owner: apiv1.DatabaseRoleRef{

Name: "app",

},

},

},

postgresExpectations: func(mock sqlmock.Sqlmock) {

// we expect the reconciler to query the DB

// for existing tablespaces

rows := sqlmock.NewRows(

[]string{"spcname", "rolname"}).

AddRow("foo", "app")

mock.ExpectQuery(expectedListStmt).

WillReturnRows(rows)

},

shouldRequeue: false,

expectedTablespaceStatus: []apiv1.TablespaceState{

{

Name: "foo",

Owner: "app",

State: "reconciled",

},

},

})

})

I find this test much easier to navigate. Leaving questions of style aside, this code has two significant advantages over the table-driven DSL in ginkgo:

- It’s amenable to static type checking

- It’s friendly to breakpoints and debuggers

I prefer Go’s standard testing package to ginkgo, but ginkgo is in widespread use in Kubernetes projects, so it’s smart to use it, and use it well.